
©2026 QuNu Labs Private Limited, All Rights Reserved.

Imagine this: you, me, the stars, and all cosmic bodies are actors on a grand stage. That stage is built from time and space, where interactions unfold. Time isn’t just a number on a clock; it silently directs how we move, change, and perceive everything.

For centuries, philosophers and scientists have tried to understand this ever-flowing, irreversible dimension. Unlike other physical quantities, time doesn’t rewind. It moves forward, unaffected by any force, yet shaping all interactions.

Measuring time has been one of humanity’s greatest challenges - and triumphs. Time measurement is a systematic practice or process of quantifying the passage of time through counting repeated phenomena and dividing intervals into smaller, measurable units such as hours, minutes and seconds.

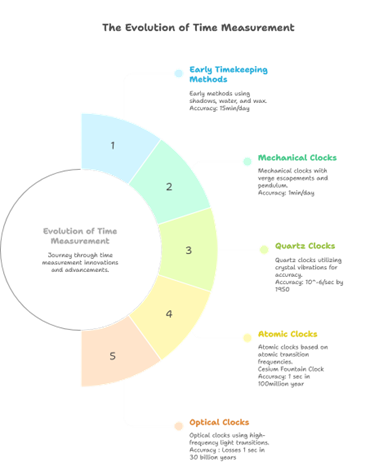

We’ll explore how time measurement evolved - from sundials to atomic and quantum clocks.

Think about the technology around you. Smartphones syncing messages, GPS guiding travel, power grids running smoothly, and even ultra-secure quantum communication - all depend on precise timing.

Without accurate timekeeping, our interconnected world would break down. This basic need for synchronization has driven research for centuries.

Modern life runs on precise time, far beyond just being punctual.

Early methods showed creativity but struggled with accuracy and reliability. (Reference 1)

By the 14th century, Europe saw the rise of mechanical clocks. These were powered by falling weights and regulated by the verge-and-foliot escapement. The foliot, a horizontal bar with small weights, swung back and forth, letting the gear train move forward in tiny jumps.

While revolutionary, they were highly inaccurate, often losing or gaining more than an hour a day. The Salisbury Cathedral Clock (1386) still stands as a reminder of this milestone.

Mechanical clocks brought progress, but accuracy was still far away.

In the late 16th century, Galileo observed that a pendulum’s swings take nearly the same time as long as the arc is small. Building on this, Christiaan Huygens built the first pendulum clock in 1656 and patented it the following year.

The pendulum replaced the erratic foliot, improving accuracy to about 1 minute per day. By the 1920s, advanced designs like the Shortt free-pendulum clock achieved near 1 second per year precision.
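The small-arc behaviour Galileo noticed can be checked with a few lines of arithmetic. The sketch below assumes an ideal simple pendulum, the textbook period formula T₀ = 2π√(L/g), and the first-order amplitude correction T ≈ T₀(1 + θ₀²/16). The pendulum length, the swing amplitudes, and the function names are illustrative assumptions, not figures from any historical clock.

```python
import math

G = 9.80665  # standard gravity in m/s^2 (assumed value)


def small_angle_period(length_m: float) -> float:
    """Period of an ideal simple pendulum in the small-arc limit."""
    return 2 * math.pi * math.sqrt(length_m / G)


def period_with_amplitude(length_m: float, amplitude_deg: float) -> float:
    """Period including the first-order correction for a finite swing angle."""
    theta0 = math.radians(amplitude_deg)
    return small_angle_period(length_m) * (1 + theta0 ** 2 / 16)


def daily_error_seconds(length_m: float, amplitude_deg: float) -> float:
    """Approximate seconds per day lost relative to the small-arc ideal."""
    t0 = small_angle_period(length_m)
    t = period_with_amplitude(length_m, amplitude_deg)
    return 86_400 * (t - t0) / t0


if __name__ == "__main__":
    length = 0.994  # metres; roughly a "seconds pendulum" (assumed)
    print(f"small-arc period: {small_angle_period(length):.4f} s")
    for amplitude in (2, 10):
        print(f"{amplitude:>2} degree swing: about "
              f"{daily_error_seconds(length, amplitude):.1f} s/day slow")
```

Under these assumptions a small swing costs only a few seconds a day, while a wide swing costs minutes, which is why keeping the arc small and steady mattered so much to early clockmakers.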

The pendulum made clocks truly reliable, transforming science and daily life.

The 20th century introduced quartz clocks. When a voltage is applied, a quartz crystal vibrates at a stable frequency (the piezoelectric effect), acting like a natural tuning fork.

First built in the 1920s, quartz clocks quickly became popular. By the 1930s, they reached an accuracy of 0.2 seconds per day. World War II accelerated development, and quartz soon powered watches, radios, and other electronics.

Still, quartz crystals age and drift slightly with temperature. By the 1950s, they achieved accuracy of about 1 part per million. (Reference 2)

Quartz brought compact, affordable, and highly accurate time to everyone.

Scientists realized atoms could serve as perfect clocks. Atoms have fixed, identical energy transitions. When electrons jump between levels, they emit radiation at exact, unchanging frequencies. This became the basis of atomic clocks and shows how quantum physics lies at the heart of modern timekeeping.

It’s like tuning a guitar with an electronic tuner: the string (oscillator) is constantly corrected until it perfectly matches the reference pitch (the atom).
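The tuner analogy describes a feedback loop, and a toy version fits in a few lines. This is a minimal sketch, assuming the frequency error can be read out directly and corrected with a fixed proportional gain; a real atomic clock infers the error from how strongly the atoms respond to the probing signal. The function name, gain, and starting offset are hypothetical.

```python
CESIUM_HZ = 9_192_631_770.0  # cesium-133 transition frequency, used as the reference


def lock_oscillator(f_start_hz: float,
                    f_ref_hz: float = CESIUM_HZ,
                    gain: float = 0.5,
                    steps: int = 20) -> list[float]:
    """Return the oscillator frequency before and after each correction step."""
    f_osc = f_start_hz
    history = [f_osc]
    for _ in range(steps):
        error = f_osc - f_ref_hz   # how far the "string" is from the reference pitch
        f_osc -= gain * error      # nudge the oscillator toward the reference
        history.append(f_osc)
    return history


if __name__ == "__main__":
    # Start 100 Hz sharp (an assumed offset) and watch the error shrink.
    for step, freq in enumerate(lock_oscillator(CESIUM_HZ + 100.0)[:6]):
        print(f"step {step}: offset {freq - CESIUM_HZ:+.3f} Hz")
```

With a gain of 0.5 the remaining offset halves at every step, so the oscillator settles onto the reference after a handful of corrections.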

Isidor Isaac Rabi’s magnetic resonance technique (1930s) made this possible and earned him the Nobel Prize in 1944. The first atomic clock in 1949 used ammonia but wasn’t much better than quartz. Atoms offered a natural, universal standard for measuring time.

Cesium-133 emerged as the ideal atom for a new standard. Its transition frequency (9,192,631,770 Hz) matched microwave technology perfectly. But in an atomic beam, the motion of the atoms broadened the measured resonance, reducing precision.

Norman Ramsey solved this in 1949 with the separated oscillatory fields method: two microwave interactions separated by a period of free drift. This sharpened the signal and led to the first practical cesium clock, built by Louis Essen in 1955.

Accuracy reached 1 part in 10⁹, a drift of only about 0.1 milliseconds per day. In 1967, the SI second was officially redefined based on cesium.
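Figures such as "1 part in 10⁹" and "0.1 milliseconds per day" are the same statement in different units. The sketch below shows the conversion, assuming the fractional error stays constant over the interval (real clocks also have noise and aging). The function names are illustrative.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY


def drift_seconds(fractional_error: float, elapsed_seconds: float) -> float:
    """Accumulated time error for a constant fractional frequency error."""
    return fractional_error * elapsed_seconds


def years_to_drift_one_second(fractional_error: float) -> float:
    """How many years it takes to accumulate one second of error."""
    return 1.0 / (fractional_error * SECONDS_PER_YEAR)


if __name__ == "__main__":
    # Accuracy levels quoted in the article.
    print(f"quartz, 1e-6: {drift_seconds(1e-6, SECONDS_PER_DAY) * 1e3:.1f} ms/day")
    print(f"cesium, 1e-9: {drift_seconds(1e-9, SECONDS_PER_DAY) * 1e3:.4f} ms/day")
    print(f"fountain, 3e-16: 1 s in {years_to_drift_one_second(3e-16):.2e} years")
```

For 1 part in 10⁹ this gives 86.4 microseconds per day, which rounds to the 0.1 milliseconds quoted above.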

Cesium clocks set the global standard for timekeeping.

To boost accuracy, atoms needed more interaction time. Laser cooling (1980s) slowed cesium atoms to a near standstill, at temperatures just millionths of a degree above absolute zero.

This enabled cesium fountain clocks. Cold atoms are tossed upward, pass through a microwave cavity twice (once on the way up and once on the way down), and so interact for longer. NIST-F1, which entered service in 1999, eventually reached an uncertainty of about 3 parts in 10¹⁶, equivalent to losing less than one second in 100 million years.
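Why does a longer interaction help? In Ramsey's method the central resonance has a width of roughly 1/(2T), where T is the time between the two microwave interactions. The sketch below compares a hot atomic beam with a fountain under that standard approximation. The beam length, atom speed, and toss height are assumed round numbers, not the specifications of any particular clock.

```python
import math

G = 9.80665  # standard gravity in m/s^2 (assumed value)


def ramsey_linewidth_hz(interrogation_time_s: float) -> float:
    """Approximate width of the central Ramsey fringe: 1 / (2 T)."""
    return 1.0 / (2.0 * interrogation_time_s)


def beam_drift_time_s(drift_length_m: float, atom_speed_m_s: float) -> float:
    """Time a fast atom spends between the two interaction zones of a beam clock."""
    return drift_length_m / atom_speed_m_s


def fountain_flight_time_s(apex_height_m: float) -> float:
    """Up-and-down flight time for atoms tossed to a given height above the cavity."""
    return 2.0 * math.sqrt(2.0 * apex_height_m / G)


if __name__ == "__main__":
    t_beam = beam_drift_time_s(1.0, 200.0)   # 1 m drift at 200 m/s (assumed)
    t_fountain = fountain_flight_time_s(1.0)  # 1 m toss height (assumed)
    print(f"beam:     T = {t_beam * 1e3:.1f} ms, width ~ {ramsey_linewidth_hz(t_beam):.0f} Hz")
    print(f"fountain: T = {t_fountain:.2f} s, width ~ {ramsey_linewidth_hz(t_fountain):.2f} Hz")
```

With these assumed numbers the fountain's resonance is more than a hundred times narrower than the beam's, which is the gain that slow, cold atoms make possible.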

Cooling atoms unlocked astonishing precision in atomic clocks.

Cesium fountains are precise, but the future lies in optical clocks. These use optical transitions in atoms like strontium or ytterbium, with frequencies of hundreds of terahertz, tens of thousands of times higher than cesium’s microwave transition.

Like using a ruler with finer divisions, higher frequency means greater accuracy. Optical lattice clocks already surpass cesium fountains, reaching uncertainties of a few parts in 10¹⁸.

Optical clocks could redefine the second and open new frontiers.

Nuclear clocks measure transitions inside an atom’s nucleus, making them less affected by outside forces than optical clocks. A leading candidate is thorium-229, which could push accuracy even further and enable new physics discoveries. (Reference 8)

Nuclear clocks may one day outshine even today’s best optical clocks.

Why do we need clocks accurate to 1 part in 10¹⁸? It’s not just about precision for its own sake; it’s about powering real technologies. One key example is quantum key distribution (QKD), used for ultra-secure communication.

In QKD, two users (often called Alice and Bob) rely on tightly synchronized clocks to send and detect photons in matching time slots. Even a small timing error can make them miss photons or introduce noise, weakening security. With quartz clocks, resynchronization must happen every few tens of milliseconds.

For instance, with quartz clocks (accuracy ~10⁻⁷) and a 100 MHz photon rate, synchronization must happen every 25 milliseconds just to maintain reliability. (Reference 5)
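The 25 millisecond figure can be reproduced with simple arithmetic. The sketch below assumes the two clocks may slip by at most a quarter of the time between photon pulses before they must be resynchronized. That quarter-period tolerance is an assumption chosen because it matches the quoted number; the cited reference may use a different criterion. The function and parameter names are hypothetical.

```python
def resync_interval_s(clock_fractional_error: float,
                      pulse_rate_hz: float,
                      tolerated_fraction_of_period: float = 0.25) -> float:
    """Time until clock drift uses up the tolerated share of one pulse period."""
    pulse_period_s = 1.0 / pulse_rate_hz
    tolerated_offset_s = tolerated_fraction_of_period * pulse_period_s
    return tolerated_offset_s / clock_fractional_error


if __name__ == "__main__":
    rate = 100e6  # 100 MHz photon rate from the text
    print(f"quartz (1e-7):  resync every {resync_interval_s(1e-7, rate) * 1e3:.0f} ms")
    # 1e-11 is an illustrative value for a compact atomic clock (assumed).
    print(f"atomic (1e-11): resync every {resync_interval_s(1e-11, rate):.0f} s")
```

Under the same assumption, a clock ten thousand times more stable stretches the interval from milliseconds to minutes.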

Atomic clocks solve this. Their extreme accuracy keeps Alice and Bob synchronized for much longer, making QKD faster, more reliable, and more secure. This is especially critical for satellite QKD, where signals are weak and conditions are noisy.

But there's more. Cutting-edge research shows that networks of entangled optical atomic clocks, in which clocks share quantum entanglement across distances, can surpass the classical precision limit known as the Standard Quantum Limit, pushing accuracy even further. (Reference 8)

Without atomic clocks, practical quantum communication would struggle to work at scale.

From sundials to atoms chilled near absolute zero, humanity’s journey in measuring time reflects our endless curiosity. Each step—mechanical, quartz, atomic, and now optical clocks—was not just about better accuracy, but also about uncovering deeper truths about the universe. Today, the second is no longer tied to Earth’s rotation but to the quantum “heartbeat” of cesium atoms, and soon, perhaps, to the oscillations of light or even atomic nuclei.

Is there a limit to how precisely we can measure time? Physicists often point to the Planck time (~5 × 10⁻⁴⁴ seconds) as the ultimate boundary: the time it takes light to travel one Planck length, often regarded as the smallest physically meaningful distance. Probing that scale would require a unified theory combining general relativity and quantum mechanics, a goal pursued through candidates such as string theory and loop quantum gravity. The Planck time is sometimes described as the very first tick of the universe, right after the Big Bang.
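The Planck time is not a measured quantity but a combination of three fundamental constants, t_P = √(ħG/c⁵). The short sketch below evaluates it, using CODATA-style values typed in by hand as assumptions rather than taken from the article.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s (assumed CODATA value)
G_NEWTON = 6.67430e-11   # gravitational constant, m^3 kg^-1 s^-2 (assumed CODATA value)
C = 299_792_458.0        # speed of light in m/s (exact by definition)


def planck_time_s() -> float:
    """Planck time: sqrt(hbar * G / c^5)."""
    return math.sqrt(HBAR * G_NEWTON / C ** 5)


def planck_length_m() -> float:
    """Planck length: the distance light travels in one Planck time."""
    return C * planck_time_s()


if __name__ == "__main__":
    print(f"Planck time:   {planck_time_s():.3e} s")
    print(f"Planck length: {planck_length_m():.3e} m")
```

The result is about 26 orders of magnitude finer than the 10⁻¹⁸ level that today's best clocks resolve.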

If history has taught us anything, it's that our curiosity always leads us to discoveries we can't even imagine today.

The quest for the perfect tick continues—perhaps until we measure the very first.